Sep

Siri vs. Gemini: How Apple and Google’s AI Overhauls Are Changing Smartphones in 2025

In 2025, your phone isn’t just smart; it’s genuinely intelligent. Artificial intelligence (AI) has firmly stepped into our daily lives, and it’s transforming the way we interact with our most personal gadget: the smartphone. Both Apple and Google have rolled out major updates to their AI assistants: Siri, now powered by Apple Intelligence, and Google’s Gemini, which is replacing the older Google Assistant on Android phones. These aren’t just cosmetic upgrades; they’re full-blown intelligence overhauls that promise to make your device faster, more helpful, and far more intuitive.

But what exactly do these changes mean for you, the everyday user? And which tech giant is offering the better experience?

Let’s dive in.

1: Apple Intelligence and the Rebirth of Siri

For over a decade, Siri has been the digital assistant that many iPhone users tolerated more than relied upon. Groundbreaking when it first appeared on the iPhone 4S in 2011, Siri soon began to look dated next to strong competition from Amazon’s Alexa and Google Assistant. While its rivals grew smarter and more conversational, Siri remained rigid and limited, and it often misunderstood even the simplest requests.

But fast-forward to 2025, and Apple has finally given Siri the upgrade it so desperately needed—introducing Apple Intelligence, a sweeping integration of generative AI into the iPhone, iPad, and Mac. With this overhaul, Siri is no longer just a voice assistant; it’s a powerful AI companion embedded deeply across Apple’s ecosystem.

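Introduced with iOS 18, iPadOS 18, and macOS Sequoia (the first features arrived in the iOS 18.1, iPadOS 18.1, and macOS Sequoia 15.1 updates in October 2024), Apple Intelligence brings a ChatGPT-style AI experience right into your pocket and lifts Siri’s capabilities to a level we’ve never seen before on an Apple device. Apple has said that some of the more personalized Siri features, such as drawing on your personal context, will take longer to arrive than the rest.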

What Can The New Siri Actually Do?

Apple’s vision for Siri goes far beyond setting alarms or sending texts. With Apple Intelligence powering it, Siri is designed to be contextually aware, more conversational, and far more helpful in your day-to-day life.

Context-Aware Responses

The new Siri understands what you mean, not just what you say. For example, you can now say something like:

“Remind me to email him tomorrow after the team call.”

And Siri will piece together who “him” is based on your recent texts or calendar invites. It connects the dots from your messages, meetings, and notes—so you don’t have to spell everything out. This contextual awareness makes Siri feel less like a robot and more like a real assistant who knows your schedule and habits.

Natural, Flowing Conversations

Before, interacting with Siri felt like talking to a machine. Now, it feels more like chatting with a helpful colleague. You no longer need to say “Hey Siri” each time, and you don’t need to start from scratch with every command. You can follow up naturally:

You: “What’s the weather in London tomorrow?”

Siri: “It’ll be cloudy with light rain, around 18°C.”

You: “What about Manchester later in the week?”

Siri: “It’ll be sunnier by Friday, about 21°C.”

Siri keeps track of the conversation, so a follow-up like this works without you repeating the context. Apple says much of this language understanding runs on the device itself, which helps keep responses quick and natural.

Device Intelligence and Personalization

Siri now operates as a true assistant across apps and devices. Ask it to find “the photo of Anna from last weekend” and it will scan your camera roll, understand who Anna is, and pick out the right image.

Or select the text of an email you’re writing and use Apple Intelligence’s Writing Tools to tidy it up: it will suggest a rewrite in a professional, friendly, or concise tone. Apple Intelligence can also summarize long group chats, filter notifications intelligently, and help you pull up documents or notes on demand.

You no longer need to jump between apps—Siri now acts as a bridge across your digital life.

ChatGPT Integration for Complex Tasks

In a surprising but strategic move, Apple has partnered with OpenAI to give Siri access to ChatGPT (specifically GPT-4o at launch), the same model that millions of people use through ChatGPT itself. When Siri detects a complex or open-ended query, like “Give me a summary of the plot of Dune” or “Suggest some meal ideas for a vegan dinner party”, it can offer to pass the request to ChatGPT to help you out.

Best of all, this feature is opt-in, and Apple builds in transparency: by default, Siri asks for your permission before handing your request to ChatGPT. This gives users the power of large-scale generative AI without compromising their control or privacy.

Built-In Privacy: Apple’s Quiet Revolution

While Google’s Gemini and other AI assistants boast impressive capabilities, Apple is taking a different approach, one that privacy-conscious users, including many in the UK, are likely to appreciate: privacy-first intelligence.

Most AI features on the iPhone, including Siri’s enhancements, run directly on the device using Apple’s own silicon (such as the A17 Pro and M-series chips). For those requests, your messages, photos, emails, and personal content never leave your iPhone during processing.

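When a request needs a larger AI model than the device can run, Apple sends it to Private Cloud Compute: servers built on Apple silicon that, according to Apple, use your data only to fulfil that request, do not retain it, and run software that independent security researchers can inspect. It’s one of the most innovative parts of the new Apple Intelligence suite. Requests you choose to hand off to ChatGPT are handled separately, by OpenAI.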

2: Google Gemini – The New Brain Behind Android

While Apple is finally catching up with Siri, Google has been pushing the boundaries of AI for years, and in 2025, it’s clear they’re not slowing down. At the heart of that effort is Gemini—Google’s most advanced large language model to date.

Gemini is now replacing Google Assistant as the default assistant on Android phones, and it isn’t just a new feature; it’s the core intelligence behind the Android experience. It takes over from the older Google Assistant in everything from voice commands to smart suggestions, real-time multitasking, and even productivity tools.

If you’ve got a modern Pixel, Samsung Galaxy, or any recent flagship Android phone, you’ve probably already crossed paths with Gemini—and chances are, it’s quietly transformed how you use your device, whether you’ve noticed or not.

What Makes Gemini So Powerful?

Multimodal Understanding

Gemini can understand and respond to text, voice, images, and video all in one go. This is what truly sets it apart from earlier assistants. You’re no longer limited to just talking or typing—you can show Gemini something, and it will respond intelligently.

You can:

- Ask it to describe what’s in a photo (e.g. “What’s the name of this landmark?”)

- Share a screenshot of a confusing chart and ask, “What does this mean?”

- Record a voice memo and ask Gemini to turn it into meeting notes.

- Point your phone at a car dashboard warning light and get an instant explanation.

This fluid, human-like understanding of multiple formats makes Gemini feel like a real assistant, not just a tool.

Deep Google Integration

Because Gemini is built by Google, it integrates seamlessly with services you already use daily: Gmail, Calendar, Google Docs, Drive, YouTube, Google Maps, and even Search.

You can now ask it to:

- Summarize a long email chain and highlight the key points.

- Schedule a meeting with colleagues based on your availability.

- Generate a presentation from notes stored in Google Docs.

- Pull up YouTube videos related to your current task.

It also works within apps like Chrome, Messages, and Keep, so you spend less time jumping between tabs or digging for information. Gemini brings everything together in a streamlined, intelligent way.

Real-Time Suggestions

Gemini doesn’t wait for you to ask—it anticipates your needs as you go. As you’re typing a reply in Gmail, it might suggest a full draft. If you’re booking tickets to Edinburgh, it could help you compare prices, find nearby hotels, or even add the itinerary to your calendar automatically.

It’s also context-aware. If you’re working late, Gemini might suggest sending a “Heads up” message to your team. If you’re on the move, it could remind you to switch to battery saver mode or finish reading that article you started earlier.

Gemini is all about saving time, cutting out friction, and boosting efficiency—whether you’re working, travelling, or just catching up with mates.

Vision Capabilities

One of Gemini’s standout features is how it turns your camera into a smart assistant. It builds on the foundation of Google Lens but goes well beyond it.

You can:

- Point your camera at a broken appliance and ask for possible fixes—Gemini will offer step-by-step solutions or connect you to a repair service.

- Scan your wardrobe and get outfit recommendations.

- Translate road signs, menus, or documents in real time, with better accuracy than ever.

- Use Gemini to read handwritten notes and convert them into digital text.

This blend of AI and visual recognition turns your phone into a true problem-solver—practical, fast, and incredibly helpful in everyday life.

Any Downsides?

While Gemini is undoubtedly powerful, there are trade-offs—and for many users, one of the biggest concerns is privacy.

Heavy Reliance on Cloud Computing

Unlike Apple’s on-device AI, Gemini performs much of its processing in the cloud. That means:

- Your queries are often sent from your phone to Google’s servers and handled there.

- This includes voice requests, documents, images, and even context from apps.

- While Google says data is anonymized and secured, it still means your information is travelling outside your device.

This can raise eyebrows for users who prefer stricter control over their data, especially given the UK’s data protection rules under the UK GDPR and the Data Protection Act 2018.

You Need to Stay Online

Because Gemini depends on cloud connectivity, it won’t always work smoothly without an internet connection. Some features can run on supported phones using Google’s smaller on-device model, Gemini Nano, but the most powerful ones, like multimodal understanding and smart suggestions, require a stable connection.

If you’re somewhere with patchy signal or on limited data, Gemini can feel a bit less reliable than expected.

Battery and Performance Impact

While Google has worked hard to optimize Gemini’s power usage, running a cloud-powered assistant can still affect battery life—especially on older Android models. If Gemini is constantly scanning your activity to offer suggestions, it may drain more power than traditional assistants.

That said, on newer phones like the Pixel 9 or Samsung Galaxy S25 Ultra, this is far less noticeable thanks to dedicated AI hardware and better optimization.

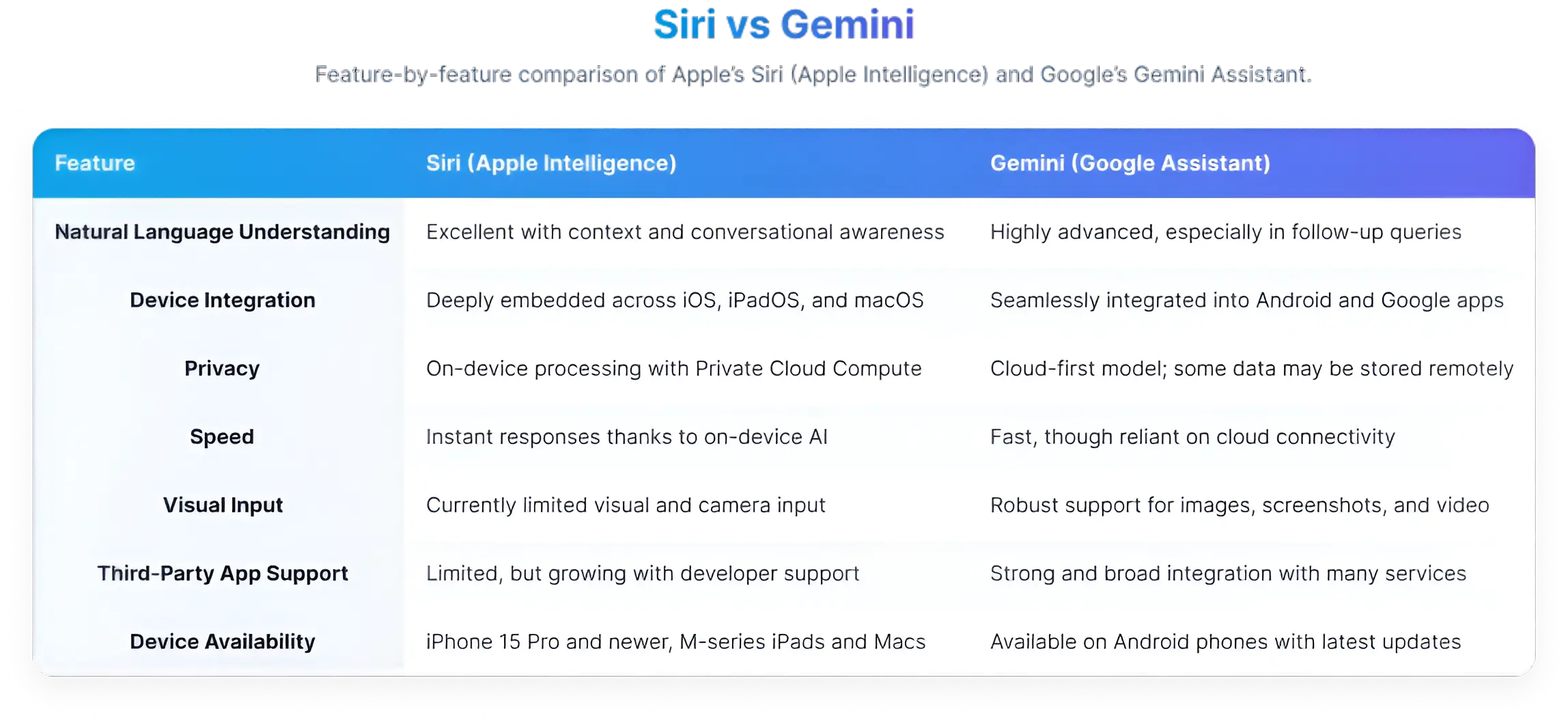

3: Siri vs. Gemini – Feature-by-Feature Breakdown

As Apple and Google race to build the smartest AI assistant, both Siri (powered by Apple Intelligence) and Gemini (the new Google Assistant) have their own strengths. Here’s a detailed breakdown of how they compare across key areas:

Natural Language Understanding

Siri has taken a massive leap forward with Apple Intelligence. It’s now much better at understanding context, so you don’t need to keep repeating yourself. For example, if you say, “Remind me to call her after the meeting,” Siri can figure out who “her” is from your messages or calendar.

Gemini is also very advanced in this area. It’s especially good at handling follow-up questions and more complex queries. If you start a conversation with Gemini, you can go back and forth naturally without needing to reset your command each time.

Device Integration

Siri is deeply integrated across Apple’s ecosystem, including iPhones, iPads, and Macs. It works closely with native apps like Mail, Calendar, Messages, and Photos, and it benefits from Apple’s signature polish and consistency.

On the other hand, Gemini is the brain behind Android, and it fits naturally into the Google ecosystem. It works brilliantly with apps like Gmail, Google Docs, Calendar, Maps, YouTube, and Search, creating a seamless experience for Android users.

Privacy and Data Handling

Apple takes a strong stance on privacy. Most of Siri’s processing is done on your device, meaning your personal data stays local. When cloud access is needed, Apple uses Private Cloud Compute, which Apple says is designed so that your data is not stored or made accessible to anyone, not even Apple itself.

Gemini, however, is cloud-first, meaning it relies on Google’s servers to process most of your requests. While this allows for more complex and powerful responses, it does mean that your data may be sent off-device, which some users may find concerning.

Speed and Performance

Thanks to on-device processing, Siri responds almost instantly in many cases. It feels fast, fluid, and responsive—especially on newer iPhones and Macs.

Gemini is also fast, but because it depends on cloud computing, a good internet connection is key. If your signal is weak or you’re offline, you might experience slower performance or limited functionality.

Visual and Multimodal Capabilities

When it comes to visual input, Gemini leads the way. You can point your phone camera at an object, and Gemini can describe it, translate text, or offer helpful info. It also handles screenshots, graphs, photos, and videos with ease.

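Siri’s own visual support is more limited. It can search your photos or find screenshots, and on recent iPhones Apple’s Visual Intelligence feature can identify what the camera sees, often by handing off to Google Search or ChatGPT. But it doesn’t yet match Gemini’s ability to analyze or interpret visual content in real time.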

Support for Third-Party Apps

Siri’s ability to interact with third-party apps is improving but still limited. Apple is gradually opening things up to developers, but the experience can be hit-or-miss depending on the app.

Gemini is more open. It works with a growing range of third-party services and apps, whether it’s productivity tools, shopping apps, or entertainment platforms. This gives it an edge if you rely on apps outside of Google’s core offerings.

Device Availability

Apple Intelligence is only available on select Apple devices: the iPhone 15 Pro and iPhone 15 Pro Max, the iPhone 16 lineup and later, and iPads and Macs with an M1 chip or newer (plus the iPad mini with A17 Pro). That means not all Apple users can access the new Siri features just yet.

Gemini is available more widely. The Gemini app runs on a broad range of modern Android phones, not just flagships such as Google Pixel and Samsung Galaxy models, although some on-device AI features need newer hardware. This makes it easier for a wider audience to try Gemini without needing the newest phone model.

4: Real-World Scenarios – Which AI Works Best for You?

Let’s bring it all down to real life. We’ve seen the specs and the features, but how do Siri and Gemini actually perform when it matters most? Here’s a look at how both assistants handle common everyday situations.

Holiday Planning Made Smarter

Planning a trip used to mean juggling a dozen apps, confirmation emails, and sticky notes. Now, Siri and Gemini aim to handle most of it for you.

Siri helps you keep your plans organized—finding flight details in your inbox, setting reminders for passport renewals, or building a rough itinerary in Apple Notes. It’s clean, private, and efficient.

Gemini, on the other hand, acts more like a full travel agent. It not only finds bookings in Gmail but also checks the weather at your destination, suggests what to pack, and offers hotel or flight recommendations in real time. You can even take a photo of your passport and ask Gemini whether it’s close to expiry. Need translations abroad? It’s already got you covered.

Verdict: Gemini feels more like a complete travel companion, thanks to its wider integration with Google services and live web results.

Powering Work and Business

If you’re self-employed or run a small business, your phone needs to be more than a device—it needs to work like a PA. This is where the assistants really stretch their legs.

Siri has become a solid productivity ally. You can ask it to rewrite emails in a more professional tone, summarize long messages, or remind you about that client call next week. It can even help draft social media captions or tidy up a note into something presentable.

But Gemini? It steps into full-blown office assistant territory. It can draft proposals in Google Docs, organize your calendar, generate presentations in Slides, and even analyze email threads to flag next steps. For business owners or freelancers already living in Google Workspace, this feels like magic.

Verdict: Gemini is the stronger tool for multitasking and content creation—especially for professionals using Google tools day in and day out.

Helping Students and Lifelong Learners

Whether you’re revising for A-levels or learning something new online, both assistants offer a big upgrade over simple web search.

Siri now helps you study smarter, summarizing long passages or answering academic questions with clarity. You can even ask it to explain something again in simpler terms or ask for follow-ups without repeating yourself.

Gemini goes further, especially with visuals. Snap a photo of a confusing graph or maths equation, and Gemini will walk you through the solution step-by-step. It can also create quick study guides, flashcards, and even test questions—making it a full-on revision buddy.

Verdict: Gemini wins this round for its ability to teach visually and interactively.

Running a Household or Family Schedule

Modern life is a juggling act—and AI can actually make things smoother.

Siri is reliable for everyday reminders, school runs, and managing lists. It syncs beautifully with shared calendars, and it can read out your messages while you’re driving or cooking.

Gemini, though, handles a busier load. It can manage multiple calendars across your family, build dynamic shopping lists, suggest quick meal plans, and send reminders for birthdays, bills, or school events. It even offers live traffic alerts if you’re about to leave the house.

Verdict: Gemini handles the chaos of modern family life with more depth and flexibility.

Mental Health and Wellness Support

In an age of stress and burnout, wellness tools matter—and both assistants are getting involved.

Siri connects directly with Apple’s Health app. It can track habits, prompt mindfulness moments, and connect you to apps like Calm or Headspace. If you ask for support, it can offer breathing exercises or gently suggest taking a break.

Gemini takes it further. It can check in on how you’re feeling when you ask, offer journaling prompts, find YouTube meditations, or coach you through relaxation techniques. You can even tell it how your day’s going, and it responds like a supportive friend.

Verdict: While Siri is private and polished, Gemini feels more emotionally responsive and resourceful in this space.

Shopping, Browsing, and Deals

From browsing shoes to tracking your Amazon orders, AI is increasingly helping with everyday online shopping.

Siri can help launch websites, check order statuses through Mail, and suggest payment options through Apple Wallet. But it’s still mostly a helper rather than a lead.

Gemini, on the other hand, acts as a shopping assistant. Ask it to find the cheapest pair of trainers in your size, or track your order from ASOS, and it pulls the information from Gmail, compares prices, and even checks delivery dates for you. It’s particularly handy for frequent online shoppers.

Verdict: Gemini is far more practical for browsing, price comparisons, and deal hunting.

Privacy: The Key Difference

No matter how smart an assistant is, privacy remains a key issue—especially when your personal life is involved.

Apple’s Siri takes a clear lead here. Most requests are processed on your device. When cloud computing is needed, Apple says its Private Cloud Compute system ensures your data isn’t stored or made accessible, not even to Apple itself.

Gemini is incredibly capable but relies heavily on Google’s cloud infrastructure. You can adjust your privacy settings, but your data still travels through Google’s servers for processing.

Verdict: For anyone who prioritizes privacy and local data processing, Siri’s approach is unmatched.

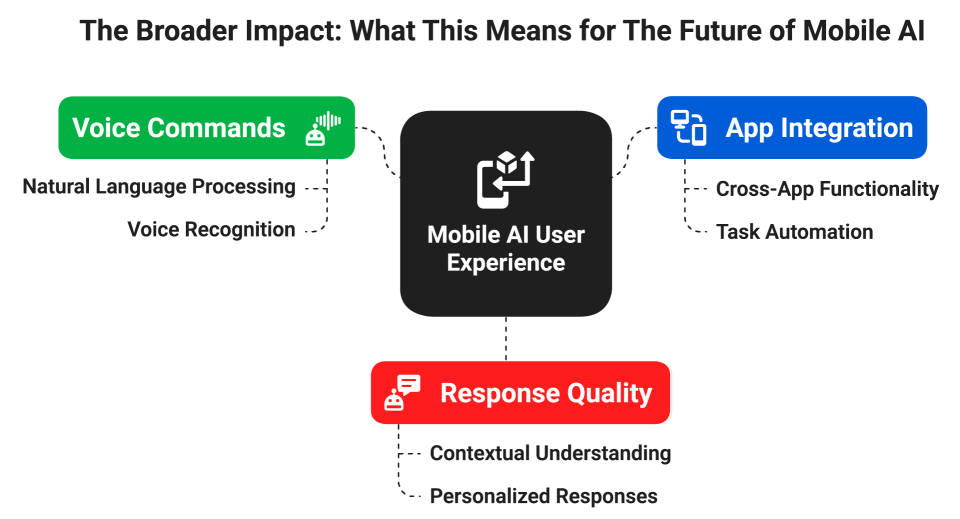

5: The Broader Impact: What This Means for the Future of Mobile AI

2025 isn’t just about shinier phones or faster processors. It’s about something far more meaningful — your phone becoming truly intelligent. No longer just a tool in your pocket, it’s now more like a partner that understands you, adapts to your needs, and helps you get through the day more effortlessly than ever before.

Apple and Google are pouring their energy into AI for a reason. They’re not just competing over who makes the better gadget — they’re shaping the way we live, work, and communicate. And with Apple Intelligence and Google Gemini, we’ve stepped into a new chapter where software matters more than specs.

We’re seeing a complete shift in user expectations — and honestly, it’s long overdue.

- No more barking out stiff voice commands like it’s 2012. You speak naturally, and your phone knows what you mean — even if you’re half-talking, half-thinking.

- No more juggling five apps to get one task done. AI now bridges your calendar, emails, messages, and docs in seconds — because you don’t have time to be your own assistant.

- No more flat, generic answers. These new assistants respond with awareness of your mood, your plans, and your tone — whether you’re writing a formal message to your boss or a cheeky text to your mate.

But here’s the real win: it’s not just smarter tech; it’s more human.

Apple Intelligence keeps your privacy at the core, doing its thinking right on your device. You never feel like you’re trading your data for convenience. It’s tidy, secure, and brilliantly helpful — like an assistant who respects boundaries.

Gemini, meanwhile, is bold, clever, and incredibly capable — ready to answer almost anything, process anything, and help with everything from business proposals to broken washing machines. If you live inside Google’s world, Gemini feels like it knows your digital life inside out.

Conclusion

As Apple and Google push their AI frontiers with Siri and Gemini, one thing is clear: the way we use smartphones is fundamentally changing. It’s no longer just about faster chips or better cameras—it’s about having an assistant that truly understands you. Whether you value Apple’s privacy-first, on-device intelligence or Google’s feature-rich, cloud-powered versatility, the future of mobile is undeniably smarter, more helpful, and more human. In 2025, your phone isn’t just smart—it’s your partner.

Frequently Asked Questions

What are the key features of Apple’s latest AI upgrade for Siri?

Apple’s latest AI upgrade for Siri focuses on enhancing contextual understanding, allowing the assistant to comprehend user intent more accurately. It also emphasizes on-device processing, which improves speed while keeping user data private. Additionally, proactive suggestions help users accomplish tasks faster by anticipating their needs based on routines and usage patterns.

What is Gemini, and how does it work on smartphones?

Gemini is Google’s next-generation AI integrated into smartphones, designed to provide a highly intelligent and conversational user experience. It leverages multimodal understanding, meaning it can process text, voice, and images simultaneously. Gemini also delivers real-time contextual insights, helping users manage tasks, retrieve information, and interact with their devices more naturally.

How does Siri differ from Gemini?

Siri is designed with a focus on privacy, offline AI capabilities, and seamless integration within the iOS ecosystem. Gemini, on the other hand, is built for cross-platform intelligence, tapping into the broader Google ecosystem and deep web knowledge. While Siri prioritizes user privacy and personalization, Gemini excels in multimodal interactions and providing broader contextual understanding.

Are Siri and Gemini becoming more than voice assistants?

Yes, both AI systems are moving beyond basic voice command functions. They now act as anticipatory assistants, predicting user needs and offering proactive solutions. This evolution allows smartphones to perform complex tasks like scheduling, summarizing information, or managing multiple apps without constant manual input.

How have these AI upgrades changed the smartphone experience?

The upgrades have significantly enhanced the way users interact with their devices. Users now experience smarter suggestions, smoother multitasking, and more accurate contextual assistance. These improvements also lead to a more personalized and intuitive experience, making smartphones an even more indispensable part of daily life.

Which smartphones support Gemini?

Gemini is primarily integrated into Google’s Pixel devices, serving as the flagship AI experience. Over time, Google has extended support to other Android-powered smartphones through software updates. This approach allows a wider audience to benefit from advanced AI features without needing the latest hardware.

How do Apple and Google protect user privacy?

Apple prioritizes privacy by processing most Siri interactions directly on the device, minimizing cloud exposure. Google, while cloud-based, encrypts data in transit and offers privacy controls that let users review and delete their Gemini activity. Both companies continue to enhance security protocols to ensure sensitive information remains protected while providing advanced AI capabilities.

Can Siri and Gemini handle multitasking?

Both Siri and Gemini have improved their ability to manage multiple tasks simultaneously. They can switch between apps, summarize information, and perform complex operations without repeated instructions. This makes it easier for users to complete workflows efficiently, saving time and effort while interacting with their smartphones.

Will app developers need to adapt to these AI changes?

Yes, app developers need to adapt to AI-driven functionalities. Integrating voice interactions, context-aware features, and AI-assisted workflows will become essential. This shift encourages developers to create smarter, more intuitive apps that leverage the predictive and multitasking capabilities of modern AI assistants.

What does the future hold for AI on smartphones?

The future points toward deeper integration with daily life, where AI assistants anticipate user needs and provide predictive automation. Cross-device intelligence will allow seamless experiences across smartphones, tablets, and other connected devices. As AI evolves, it will transform smartphones into proactive digital partners rather than simple reactive tools.